There has been growing demand to ‘do something’ about online misinformation since the 2016 US Presidential election.

This demand has increased in light of Covid-19 and as this year’s US presidential election campaign heats up.

In this note I want to explore one of the practical options for ‘doing something’, which would be to set up a new misinformation regulator in the UK.

I have chosen to look at the single country regulator model to reflect the fact that a regulator would need to be empowered in national legislation, and because misinformation priorities will vary between countries.

When I talk about a ‘regulator’ here that does not necessarily mean that this would be a formal government body.

There is a well-founded nervousness in many countries about creating any kind of ‘Ministry of Truth’ where government would dictate what can and cannot be said in the public sphere.

It may be more appropriate for this work to be done by an arm’s-length body rather than by a formal arm of the state, for example if it were delegated by statute to an independent non-governmental organisation.

The debates in the UK over press regulation have attempted to solve for a similar challenge – can a regulator be truly independent if it is state-mandated?

I will not attempt to resolve these constitutional questions here but rather consider how a misinformation regulator might do a good job.

Processing Claims

Once a regulator has been established, its work will revolve around the processing of claims where people assert that something is misinformation and ask the regulator to intervene.

I would expect the regulator to be open to receiving claims from any member of the public (at least people resident in the UK) and we can predict that this would lead to a high volume and variety of claims.

For the purposes of this model, I imagine incoming claims as being through some kind of online form where the regulator would need to capture the following information :-

WHAT is the misinformation and what harm is it causing?

WHERE is the misinformation circulating and where is the harm occurring?

WHO are the targets and sources of the misinformation?

WHY is the misinformation being created and distributed?

HOW is the misinformation being created and distributed?
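For readers who think in code, the intake form could be sketched as a simple record with one field per question. This is only an illustration: the class name `Claim`, its field names and the `missing_answers` helper are assumptions made for this sketch, not a description of any real system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class Claim:
    """One incoming claim, with a free-text answer per question on the form."""
    what: str   # the misinformation and the harm it is causing
    where: str  # where it is circulating and where the harm is occurring
    who: str    # the targets and sources of the misinformation
    why: str    # why it is being created and distributed
    how: str    # how it is being created and distributed
    # Hypothetical extra field: when the claim was submitted.
    received_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def missing_answers(self) -> List[str]:
        """Return the names of any questions the claimant left blank."""
        questions = ("what", "where", "who", "why", "how")
        return [q for q in questions if not getattr(self, q).strip()]


# Example: a claimant who does not know who is behind the content.
example = Claim(
    what="Posts saying 5G causes Covid-19; threats against phone masts",
    where="Social media groups used by people in the UK",
    who="",
    why="Unknown",
    how="Shared images and text posts",
)
```

A claim with blank answers need not be rejected; a check like `example.missing_answers()` would simply tell the regulator which questions it still has to answer for itself.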

I will refer to the new regulator as “NewReg” for the rest of this post to avoid confusion with any other regulators I mention.

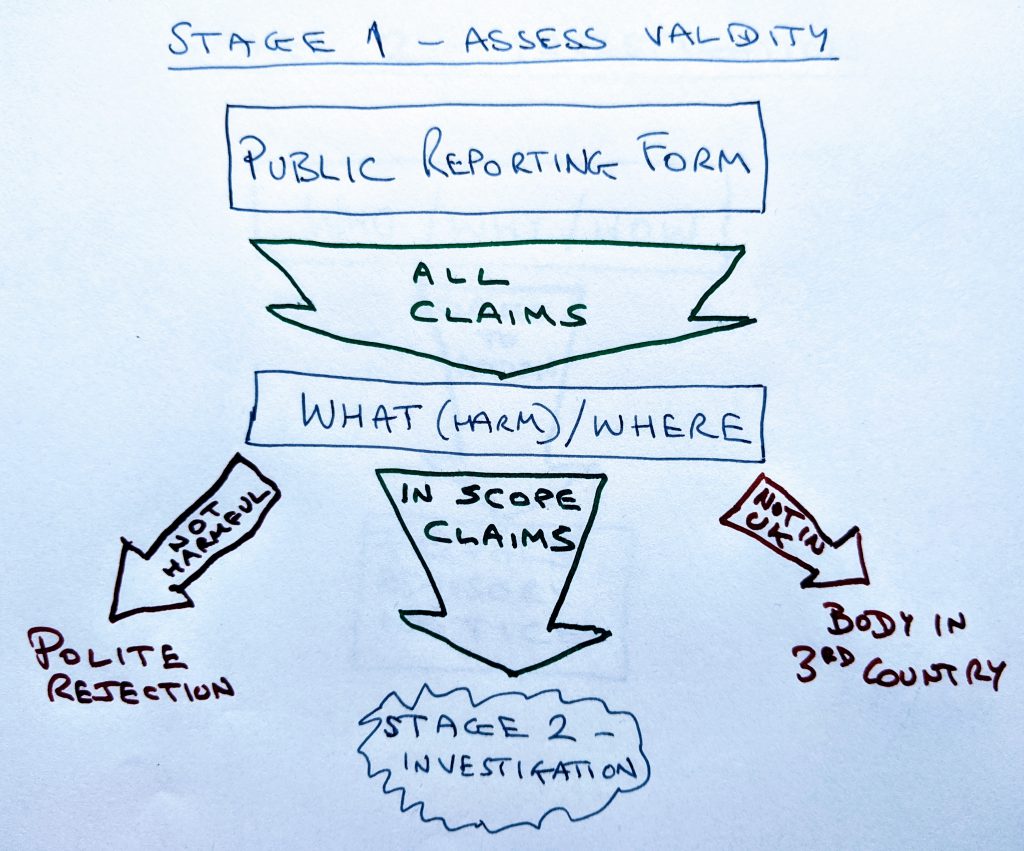

STAGE 1 – In Scope?

In Stage 1, NewReg would look at the first two questions – WHAT and WHERE – to decide whether a claim is in scope for investigation.

There is a potential chicken and egg issue – we do not want NewReg to waste time investigating claims related to content that is not misinformation, but they may not be able to make this determination without an investigation!

In practice, there will be a class of claims that are obviously not misinformation and can be dismissed quickly, with a smaller subset that may need to be passed to the investigatory phase because there is sufficient doubt.

NewReg will need to develop a clear protocol (which it should publish) for how it will resolve questions where there is doubt about whether something is or is not misinformation.

This might involve contracting with a factchecking service to provide a rapid assessment to determine if a claim is worth detailed investigation.

It is likely that assessments of some claims will be incorrect, or that they may change over time as new information emerges, and NewReg might offer a mechanism for claimants to challenge rejections.

There will be another class of claims where there are reasonable grounds to believe something is misinformation, but there is no evidence that it is causing sufficient harm to be worth investigating.

Examples of ‘harmless’ misinformation include: nonsense theories – ‘the world is flat’; religious beliefs – ‘the world was created in 4004 BC’; sports claims – ‘team X were the real winners of the title’ etc.

NewReg should publish a protocol with its criteria for when it does not think that demonstrably false information causes sufficient harm to be worth investigating.

NewReg would take no further action with these claims other than sending a polite rejection to the claimant (if it has sufficient resources to do so).

If the experience of social media companies is anything to go by, there could be a significant volume of complaints in this ‘non-harmful’ category as people like to express themselves through any channel available.

The WHERE question will determine whether there is sufficient UK locus for a UK-based regulator to take something further.

Many online platforms used by people in the UK are of course global, but it would not make sense for a UK entity to try and resolve every issue anywhere in the world.

In some cases, claims may relate to matters that are important and of interest to some people in the UK but these may still not be a matter for NewReg.

For example, misinformation about the Clinton campaign in the last US election was certainly of interest to many people in the UK, and US elections matter to people here, but this may still not be a sufficient priority for a UK body to take up.

In an ideal world, there would be a network of bodies in different countries dealing with misinformation so that complainants could be redirected to someone who will take an interest if they raise issues of harm in other countries.

A particular concern here may be situations where claims relate to significant harms occurring in countries with weak rule of law and no trusted local body to address them.

It is quite proper (and praiseworthy) that people in the UK would raise concerns about harmful misinformation in, eg Myanmar and Sri Lanka, but it may not be within NewReg’s remit to deal with these directly.

A protocol for important non-UK claims might involve NewReg passing these to dedicated teams in the Foreign Office who would then take them up with relevant governments, online platforms and civil society organisations.

An alternative model would be for NewReg to have a broader remit and to involve itself in worldwide issues where these meet a certain threshold of interest amongst people in the UK, even if they are not directly affected.

Either way, it would be important to spell out geographical scope in the charter establishing NewReg to avoid later confusion about where it will and will not get involved.

So in this first stage, the regulator would have reduced the full set of incoming claims to a subset that is reasonably likely to be :- a) actual misinformation, b) materially harmful, and c) affecting people in the UK (if this is the agreed scope).
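The three Stage 1 tests can be sketched as a simple filter. This is a minimal illustration under stated assumptions: the names `Stage1Assessment`, `Stage1Outcome` and `stage1` are hypothetical, the order in which the tests are applied is my own choice, and the three yes/no inputs stand in for judgements that NewReg staff (or a contracted factchecker) would actually have to make.

```python
from dataclasses import dataclass
from enum import Enum


class Stage1Outcome(Enum):
    NOT_MISINFORMATION = "rejected: not misinformation"
    NOT_HARMFUL = "rejected: no material harm"
    NO_UK_LOCUS = "rejected or passed on: insufficient UK locus"
    PROCEED_TO_STAGE_2 = "in scope: proceed to Stage 2"


@dataclass
class Stage1Assessment:
    likely_misinformation: bool  # e.g. from a rapid factchecker assessment
    materially_harmful: bool     # judged against the published harm criteria
    affects_uk: bool             # judged against the scope set in the charter


def stage1(assessment: Stage1Assessment) -> Stage1Outcome:
    """Apply the three scope tests in turn and report the first one failed."""
    if not assessment.likely_misinformation:
        return Stage1Outcome.NOT_MISINFORMATION
    if not assessment.materially_harmful:
        return Stage1Outcome.NOT_HARMFUL
    if not assessment.affects_uk:
        return Stage1Outcome.NO_UK_LOCUS
    return Stage1Outcome.PROCEED_TO_STAGE_2


# 'The world is flat': false, but not harmful enough to investigate.
flat_earth = stage1(Stage1Assessment(True, False, True))

# A false and harmful claim circulating among people in the UK.
harmful_uk_claim = stage1(Stage1Assessment(True, True, True))
```

If NewReg were given the broader worldwide remit discussed above, only the third test would change; the first two would stay as they are.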

This subset of claims then passes into a second stage where more aspects of the claims will be considered.

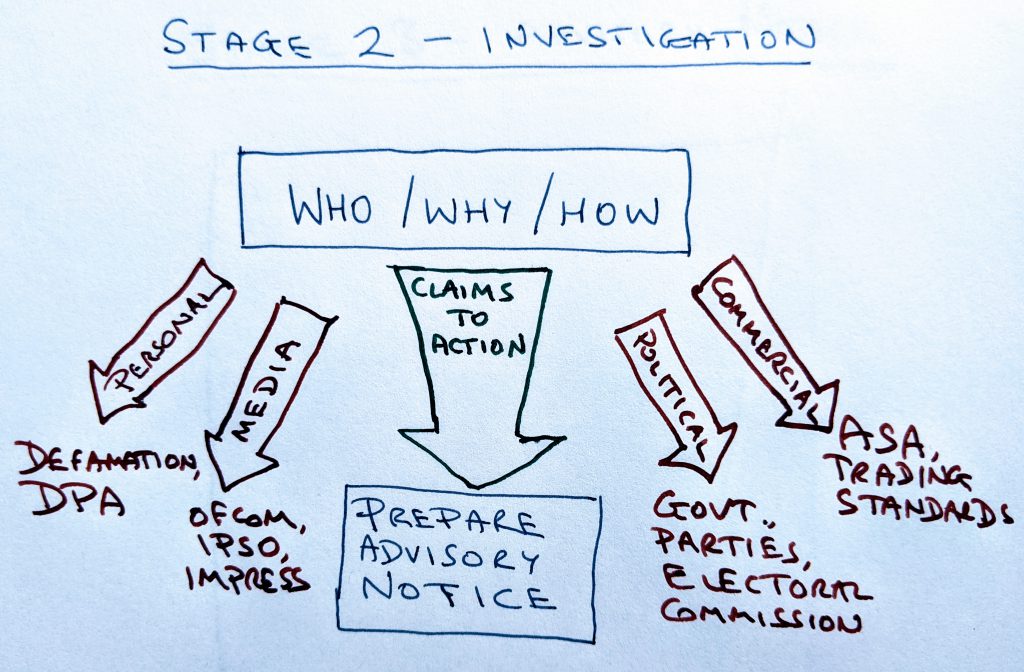

STAGE 2 – Investigate/Redirect

The operative questions for the second stage are WHO, WHY and HOW.

These questions help NewReg to understand if there is an existing body or law or regulatory authority that might take up the claims.

Some people may want NewReg to step in even where there is overlap with existing frameworks, as they feel there is some deficiency in the status quo, but I would strongly advocate against this.

Having NewReg get involved might provide some short term relief, but the public will not be well-served over the long-term by having multiple overlapping structures addressing the same harms.

If an existing regulator covers an area but lacks some powers to deal with today’s context, then we should resolve this through changes to the legislation rather than creating overlap with a new regulator.

I have described four classes of claim that would be redirected in the diagram above – PERSONAL, MEDIA, COMMERCIAL and POLITICAL – but there may be others that should be passed on to existing regulators following the same logic.

PERSONAL - if WHO is impacted is a private individual, the WHY is intentional damage to an individual's reputation, and the HOW is any medium, then NewReg would redirect the claimant to defamation and/or data protection law.

If the claim relates to harm to an individual rather than broader society, then we have a body of mature legislation specifically created to deal with such misinformation in defamation law.

The individual here would be advised to seek legal remedies rather than expecting the regulator to take up their cause.

They may also go to the Information Commissioner’s Office, the UK’s data protection regulator, if they believe that the law has been breached here, for example if information about them is being processed without their consent.

Claims about individuals may also have elements of the other classes, eg being made in the MEDIA or with POLITICAL intent, and NewReg might also suggest other forms of redress if this is the case.

There may be very sympathetic cases in this class where private individuals are suffering real harm and this might create significant pressure on NewReg to take up their cause.

A model where NewReg does take up some private individual harm claims is certainly possible, but this should be worked through and made clear at charter stage rather than allowed to happen in response to hard cases.

MEDIA - if WHO is a regulated media outlet, the WHY is a journalistic purpose, and the HOW is a publication that accurately identifies itself, then NewReg would redirect these claims to the relevant media regulator.

NewReg would redirect any claims that relate to regulated media outlets to Ofcom (broadcast), IPSO (most national newspapers and magazines) or IMPRESS (mainly local press and websites) as appropriate.

Ofcom has well-established protocols for looking into claims related to misinformation on broadcast media and people are used to registering complaints with them including for content shared online by broadcasters.

The effectiveness of press regulators like IPSO and IMPRESS is more contentious, but they do impose accuracy standards on their members and are able to order publications to make corrections.

If there are concerns that these regulators are not sufficiently agile or robust in dealing with misinformation in the media they oversee, then they should be challenged on this rather than having NewReg create a parallel regime.

There is an open question about how to define a ‘media’ outlet in the online space, but in the case of these referrals this is a matter of fact, ie you are a regulated media outlet if you are on a list maintained by a regulator.

Where a claim relates to misinformation being promoted by an entity that claims to be a media outlet but is not under the oversight of a UK regulator, then no referral could take place and it would be for NewReg to intervene.

COMMERCIAL - if WHO is a business, and the WHY is commercial gain, then NewReg would redirect them to the Advertising Standards Authority (if the HOW involves paid promotion) or to Trading Standards Authorities (for any misleading business practices).

Where somebody is selling goods and services then they usually have to comply with a wide range of regulation relevant to their sector.

It is normal for there to be specific requirements in terms of providing accurate information to customers, from weights and measures through to returns on financial products.

There may be a wide range of sector-specific regulators that could take up claims that a business is promoting misinformation, including Trading Standards Authorities.

In the case of advertising in the UK, this is overseen by a dedicated regulator, the Advertising Standards Authority which has accuracy as a key standard.

It may be hard to apply the full range of penalties to sources of commercial misinformation in another jurisdiction, but where they are targeting customers in the UK then these existing UK regulators will have a role to play.

POLITICAL - if the WHY is political, then NewReg would redirect the claim, depending on the WHO and HOW, to relevant parts of government, the Electoral Commission, or to the political parties themselves.

This may be the most contentious of the suggestions I make here for redirecting claims.

The use of misinformation in a political context is one of the most discussed concerns people have because it is seen as undermining democracy.

It is also one of the most sensitive issues for platforms and regulators to address because of fears that any intervention they make might undermine democracy in a different way.

The challenge is how to right the wrong of political misinformation without this becoming two wrongs as both the original content and the platform/regulator response are seen as inappropriately partisan.

The UK has an existing framework for regulating political campaigns and elections and a dedicated regulator in the Electoral Commission.

The most orderly approach would be for NewReg to refer claims about misinformation being used in political campaigns to the Electoral Commission.

People close to this subject will point out that there are significant gaps in the current regulatory framework in respect of both misinformation and online campaigning more generally.

This is true and there are some good proposals being circulated by organisations like WhoTargetsMe to address those gaps.

The priority for UK policy makers should be to make progress in updating the relevant legislation and this should be a faster process than the one that may lead to the creation of a new misinformation regulator.

So I am assuming that by the time NewReg exists, the Electoral Commission will have an enhanced mandate to deal with claims that NewReg sends to them.

What we should absolutely NOT do is delay proper reform of electoral law further by relying on sticking plaster solutions like having someone else deal with political misinformation.

As well as claims about misinformation in election campaigns, we can expect people to raise questions about government information.

This can happen in both directions, with government either accused of misleading the people, or government believing it is a victim of misinformation being spread by its opponents.

I would again recommend that we do not expect NewReg to resolve claims where the core dispute is a political one about the actions of the government.

There is a range of accountability mechanisms that can consider whether government is guilty of using misinformation, including formal complaints under Ministerial and civil service codes and Parliamentary scrutiny.

The media and factcheckers also investigate claims about government misinformation as part of their normal business so there would be scrutiny even if NewReg does not actively take claims up.

Similarly, if government claims that people are using misinformation to undermine it politically, this would be for the government themselves to respond rather than being a matter for NewReg.

It would undermine NewReg’s independence if it were seen to be a tool for defending the government’s reputation, and government is anyway big and ugly enough to fight its own corner.

[NB there is a wider debate to be had about how governments are defending themselves online, and whether this is in a public service spirit or more of a partisan political (and therefore inappropriate) one].
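The Stage 2 redirect rules described in this section can be sketched as a routing function. This is an illustration only: the names `Why`, `Stage2Claim` and `redirect_destinations` are hypothetical, and the boolean inputs stand in for determinations NewReg would make from the WHO, WHY and HOW answers. Because a claim can have elements of more than one class, the sketch returns every matching destination rather than stopping at the first.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Why(Enum):
    REPUTATIONAL_DAMAGE = "intentional damage to an individual's reputation"
    COMMERCIAL_GAIN = "commercial gain"
    POLITICAL = "political"
    OTHER = "other"


@dataclass
class Stage2Claim:
    why: Why
    target_is_private_individual: bool = False
    # A matter of fact: the outlet is on a list kept by Ofcom, IPSO or IMPRESS.
    source_is_regulated_media: bool = False
    source_is_business: bool = False
    involves_paid_promotion: bool = False


def redirect_destinations(claim: Stage2Claim) -> List[str]:
    """Return every existing framework the claim should be redirected to."""
    destinations: List[str] = []
    if claim.target_is_private_individual and claim.why is Why.REPUTATIONAL_DAMAGE:
        destinations.append("PERSONAL: defamation and/or data protection law")
    if claim.source_is_regulated_media:
        destinations.append("MEDIA: Ofcom, IPSO or IMPRESS as appropriate")
    if claim.source_is_business and claim.why is Why.COMMERCIAL_GAIN:
        if claim.involves_paid_promotion:
            destinations.append("COMMERCIAL: Advertising Standards Authority")
        else:
            destinations.append("COMMERCIAL: Trading Standards Authorities")
    if claim.why is Why.POLITICAL:
        destinations.append(
            "POLITICAL: government, the Electoral Commission or the political parties"
        )
    # Nothing matched: no existing body covers this, so NewReg keeps the claim.
    return destinations or ["NEWREG: retain for Stage 3"]


misleading_ad = redirect_destinations(
    Stage2Claim(why=Why.COMMERCIAL_GAIN, source_is_business=True,
                involves_paid_promotion=True)
)
conspiracy_theory = redirect_destinations(Stage2Claim(why=Why.OTHER))
```

The final line of the function carries the main design choice in this section: NewReg only retains a claim when no existing regulator or body of law has a claim on it.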

Covid-19 Examples

We can bring this to life with some examples of claims that might have been made if NewReg were in existence as we live through the Covid-19 crisis.

PERSONAL – there have been various allegations made about how individuals behaved during the lockdown, eg where they have travelled or met with other people outside their households.

Where people feel that misleading information about someone’s actions is circulating, either in attack or defence of them, this might lead to claims being made asking NewReg to intervene.

NewReg should not become involved in disputes like these over whether somebody did or did not do something specific in their private lives; it is rather for that person to decide how to address the situation.

They may use the media to set the record straight, or pursue defamation claims where they judge this to be appropriate.

Where the misinformation is being promoted by bodies that are overseen by a regulator, eg newspapers, then they may also pursue claims with those bodies.

MEDIA – media outlets in the UK have largely been consistent in promoting accurate information about Covid-19, but there have been some issues that were referred to regulators.

Notably, we saw Ofcom take rapid decisions in April 2020 following complaints about Covid-19 misinformation on broadcast channels they oversee.

The remedy imposed on London Live TV in one of the decisions included requiring them to broadcast the outcome of the complaint in a form chosen by the regulator.

This is a good example of how the system could continue to work with NewReg in place, where they would redirect claims to media regulators so they can use their powers of sanction and correction.

POLITICAL – there has been criticism suggesting that announcements by the UK government were misleading, particularly over their progress with testing.

People might register claims with NewReg that statements by the Health Secretary contained inaccurate figures and are therefore misinformation.

NewReg should not take up these claims directly but rather refer them to relevant parts of the government.

Notably, the UK Statistics Authority did look into questions about numbers of tests and entered into a public debate with the Health Secretary.

The government also felt themselves to be victims of misinformation during the crisis and have been responding robustly through their own social media accounts.

NewReg would have been a good vehicle to take up many of the misinformation issues that a government unit is currently managing in relation to inaccurate health advice.

But it should not have to pursue claims when the government is concerned about political embarrassment rather than a broader public interest, such as a false claim about nurses treating the Prime Minister.

COMMERCIAL – this has been a busy time for a number of regulators in the UK as they deal with complaints about businesses trying to profit from the crisis.

A series of rulings from the Advertising Standards Authority (ASA) shows us how this might work if NewReg redirected relevant complaints to them.

The ASA published a statement explaining how its rules applied to Covid-19 and it has issued rulings on ads containing a number of different inaccurate claims about products and Covid-19.

NEWREG – having redirected the claims which are within the domains of other regulators, this would still leave NewReg with plenty of other claims to address in the context of Covid-19.

One that stands out is the false conspiracy theory that 5G causes Covid-19, and I will use this as an example of how NewReg might proceed where something is in scope.

STAGE 3 – Preparing A Response

The principal tool that NewReg would use is to issue an Advisory Notice describing a type of actionable misinformation, with examples, and a range of mitigation measures that online services should put in place.

NewReg would analyse the information they have collected, especially on WHO is responsible for the misinformation and HOW they are spreading it, and use this to make a determination about the right MITIGATION.

The MITIGATION recommendations will usually involve a mix of three different elements – REDUCTION, CORRECTION and PREVENTION.

REDUCTION = efforts to make sure fewer instances of the misinformation in question exist including both deletion and reduced distribution.

CORRECTION = methods to ensure people have access to accurate information that counters the false claims in the misinformation.

PREVENTION = tools that make it less likely the misinformation will be published or discovered.

This may feel wishy-washy to those who want there to be robust orders against platforms with heavy sanctions for failure to comply.

But there is a fundamental problem with seeking to apply sanctions for failure to deal with content that is not itself illegal.

Where a country has made a particular form of speech illegal, eg hate speech, then takedown orders can be issued in respect of that content and heavy sanctions applied where these are not respected.

This will apply to some forms of misinformation, eg court orders can insist on the removal of defamatory content, or content that breaches election law.

But for a lot of misinformation, it would be inconsistent with human rights principles to render this speech illegal – it may be ‘bad’ but it is not bad enough to justify legal prohibitions.

NewReg will therefore be in a position of asking platforms to take actions against legal speech rather than issuing something like a court-ordered takedown notice.

Readers who work in cybersecurity may hear an echo of the Advisory Notice system used by organisations around the world that are colloquially known as ‘CERTs’ and this is intentional.

One way to think of misinformation is that it is a ‘bug’ in the information ecosystem that needs to be analysed and responded to like a bug in hardware or software.

NewReg’s role is to produce a definitive public statement of what the harm is and what the UK authorities want to see done about it.

The act of creating an advisory notice takes us from ‘something must be done’ to ‘this is what responsible parties should do’.

A system of public advisory notices provides a good framework for transparency and accountability.

We can see how NewReg is balancing freedom of expression and harm based on their expert view of the equities for British society, not those of the platforms, and we can challenge them if we think their judgement is wrong.

And we can make judgements about the services being offered by platforms according to how they respond to notices.

[NB this would require platform responses to notices to be transparent and NewReg will likely need a power to insist on responses where these are important and not already being volunteered].

There are intentional similarities with the system of cybersecurity advisory notices where organisations are not forced to apply patches but there is strong industry and reputational pressure to do so.

A NewReg Advisory Notice

I will close this post with an example of what an advisory notice might look like for the 5G Covid-19 conspiracy.

This is illustrative as I do not have the resources to do all the research that would go into an actual definitive notice, but I hope it helps bring to life how the NewReg that I have described in this post might make a difference.

MISINFORMATION ADVISORY NOTICE

DATE: 4th July 2020

SUBJECT: 5G and Covid-19

DESCRIPTION: Various claims are made in writing and graphical form that there is a link between radiation from 5G masts and Covid-19. Exemplars of the text used, keywords and common images can be found at [LINK TO PUBLIC DATABASE OF ANALYSED AND SORTED CONTENT].

HARM: There is an immediate threat to people and property in some cases as supporters of the conspiracy threaten direct action against phone companies. The conspiracy may cause more general risks to the public interest in the areas of health and technology. It undermines the public health strategy against Covid-19 which depends on people understanding that it is social interactions (not mobile phone signals) that spread the disease. The UK is committed to rolling out 5G technology and the conspiracy may slow that process, delaying the benefits of 5G reaching people and businesses for no good reason.

MITIGATION: We have seen rapid take-up of this conspiracy theory and its association with other misinformation campaigns, eg on vaccination. We advise that this is a high priority for urgent mitigation measures. We expect regulators to take robust action where the conspiracy is being propagated in regulated entities and contexts. We recommend that online platforms take the following measures against individuals or organisations promoting the conspiracy on their services.

We advise REMOVAL of any content that includes physical threats against people or property associated with deploying 5G. There have already been instances of attacks demonstrating that threats are credible and so removal is justified.

We do not advise the removal of content where people are discussing the supposed risks of 5G without any associated threats of violent actions. In these cases, we recommend a CORRECTION approach and advise platforms to direct users to this recommended content: [NOTICE LINKS TO FACTCHECKER CONTENT IN A RANGE OF FORMATS TO SUIT DIFFERENT PLATFORMS, eg FullFact long-form article at https://fullfact.org/health/5G-not-accelerating-coronavirus/]

We advise that the distribution of content associating 5G with Covid-19 is reduced in feed-based services. We also advise that search and discovery mechanisms are adjusted so that content advancing the conspiracy is not returned. We recognise that these preventive measures may reduce the distribution of content challenging the conspiracy as well as content promoting it. We have determined that this is a reasonable trade-off in this case. [LINK TO ANALYSIS OF OVERALL NATURE OF CONTENT]

REVIEW AND ONGOING WORK: We intend to review this advice in a period of ONE month. We have received claims that there are some state-sponsored promoters of this conspiracy and are currently investigating these claims. We may provide additional urgent guidance if this analysis confirms these links.
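In keeping with the cybersecurity analogy, a notice like the one above could also be published as structured data so that platforms can track which recommendations they have acted on. The sketch below is purely illustrative: the names `Mitigation`, `Recommendation` and `AdvisoryNotice` are hypothetical, the way each recommendation is assigned to REDUCTION, CORRECTION or PREVENTION is my reading of the definitions given earlier, and the review period of "ONE month" is approximated as 30 days.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from enum import Enum
from typing import List


class Mitigation(Enum):
    REDUCTION = "reduction"    # deletion and reduced distribution
    CORRECTION = "correction"  # pointing people to accurate information
    PREVENTION = "prevention"  # making publication or discovery less likely


@dataclass
class Recommendation:
    element: Mitigation
    action: str


@dataclass
class AdvisoryNotice:
    issued: date
    subject: str
    recommendations: List[Recommendation] = field(default_factory=list)
    review_after_days: int = 30  # assumption: one month treated as 30 days

    def review_due(self) -> date:
        """Date by which NewReg has said it will review the advice."""
        return self.issued + timedelta(days=self.review_after_days)

    def actions_for(self, element: Mitigation) -> List[str]:
        """List the recommended actions under one mitigation element."""
        return [r.action for r in self.recommendations if r.element is element]


notice = AdvisoryNotice(
    issued=date(2020, 7, 4),
    subject="5G and Covid-19",
    recommendations=[
        Recommendation(Mitigation.REDUCTION,
                       "Remove content with physical threats against people or property"),
        Recommendation(Mitigation.CORRECTION,
                       "Direct users to recommended factchecker content"),
        Recommendation(Mitigation.REDUCTION,
                       "Reduce distribution in feed-based services"),
        Recommendation(Mitigation.PREVENTION,
                       "Adjust search and discovery so conspiracy content is not returned"),
    ],
)
```

A platform's public response to the notice could then be organised under the same three headings, which would make it easier to compare how different services have responded.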

Summary :-

I explore how a new UK misinformation regulator might do its work.

I consider the relationship with other existing regulatory frameworks.

I look at some examples of Covid-19 misinformation.

I describe a model of Misinformation Advisory Notices.