[Note for new readers:- these posts are written by someone who spends a lot of time working on internet regulation. If you are a non-specialist reader you may feel you are being thrown in at the deep end as I assume prior knowledge of the subjects I cover. To give everyone more context I will try to include links to relevant background material and would encourage you to follow these. And I explain more about me and this blog on the About page.]

The Question

As we observe how internet platforms are managing information related to Covid-19, this prompts questions about their approach to misinformation more broadly.

We see platforms actively promoting “good” information and suppressing – either through removal or reduced distribution – “bad” information.

The producers of the good information and the assessors of the bad information are 3rd parties so there is an argument that platforms are technically still not acting as the ‘arbiters of truth’.

But this distinction feels tenuous when platforms are (intentionally) using such heavy hands – both visible and invisible – to steer people towards content that the platforms agree is true.

We have also seen platforms remove some content from President Bolsonaro of Brazil and this seems to go against the notion that there is an overriding public interest in not removing statements by political leaders.

So, does this mean that the ground has shifted and platforms are now more willing to be the arbiters of truth? And should we expect them to take more action against the speech of politicians?

The short answer to both questions is “maybe a bit, but there are still important constraints” and the longer answer is what I will explore in a couple of posts.

Disclaimer

I want to add a disclaimer as I am now starting to write about matters that are clearly significant for Facebook, my employer until the end of 2019.

This is in three parts.

First, I like and respect the people who work at Facebook and those I know from other similar internet platforms.

They are not perfect and do not get everything right but I remain of the view that they are well-intentioned and doing as good a job as anyone could in dealing with some massive challenges.

I therefore consider myself to be anything but a Prodigal Techbro.

Second, I do not have any secret information to share about policy and decision-making in respect of misinformation at Facebook.

The most significant internal debates have already been aired in public, either formally or through extensive leaks.

Even if I could remember some “he said” or “she said” details from old work conversations I do not think they would add much to our understanding of what we need to do in the future.

So I plan to write as an outsider trying to bring relevant experience to bear after reflection and at a (albeit still short) distance, rather than claiming to provide an insider’s perspective.

Third, I continue to own Facebook stock though I no longer receive any remuneration directly from the company.

I do not expect my musings to have any impact on the Facebook stock price in either direction but I would clearly benefit if it does go up so, yes, I have a financial interest in their success.

The Facebook stock price is not what is motivating me to write this but I can only ask you to accept my word for that.

Now we have established that I am a fanboy with a financial interest in big tech who has no intention of recanting or blowing whistles, some of you may decide to stop reading.

I hope you will rather stick with it and see for yourself whether even such a deeply compromised individual may have something interesting to say.

That’s more than enough about me, now onto the meat of answering the questions posed by the Covid-19 response.

Re-definition

If we want to understand why platforms make the decisions they do about acting on misinformation then we can start with how they characterise their own position.

A common framing of this is that platforms do not want to be the ‘arbiters of truth’ but I think this becomes more accurate if we insert an additional word.

That word is ‘partisan’ (as the sharp-eyed amongst you will likely have already figured out from the title of the blog post).

What platforms really do not want is to become “partisan arbiters of truth”.

A description of how things work today naturally leads to a discussion about whether this is right or whether other models should be preferred.

This post is long enough just fleshing out the description so I will write a separate post on the pros and cons of different long-term models.

And I will follow that with a consideration of how regulation might eventually impact on what platforms do and how decisions by platforms might in turn affect the regulatory debate.

Evolution or Revolution

The recent actions on Covid-19 by internet platforms have been extraordinary in terms of scale and speed.

But we can find comparable situations where there has been a public safety crisis and a need to direct people to accurate information about how to get help, for example following a natural disaster or a terrorist attack.

A defining characteristic of information provision during disasters is that it is generally not politicised and this creates a relatively straightforward environment in which platforms can take action.

The Covid-19 response is to an extent an evolution of the disaster responses that have rolled out on platforms over the years with little controversy or attention.

With Covid-19 there is a very broad political consensus in most countries on what is happening and what needs to be done.

This means that there is a single truth, at least around the core public health response, rather than multiple competing partisan positions.

This of course does not apply to discussions about how well governments are responding to Covid-19 which is highly partisan.

There are also some examples of partisan disagreement over specific solutions but these tend to be at the margins while the main corpus of information about prevention and seeking medical help remains uncontested.

We can also find non-disaster scenarios where platforms have again shown themselves willing to intervene quite substantively to promote true information.

For example, it is common for platforms to promote accurate information about polling places and dates and remove content that has false claims about where and when to vote.

This is highly political but generally not partisan.

Even where politicians have a private interest in reducing turnout, they are unlikely to claim that misleading voters on the mechanics of voting is OK and make this into a partisan issue.

This helps us to understand that it is not politics per se which causes angst for platforms but rather the set of political issues that have become partisan.

Finally, there is an obvious antecedent in how platforms have dealt with anti-vaccination content which I will explore in detail in the next section as it is instructive about what happens when health advice becomes partisan.

Decision Framework

Platforms need a framework for making decisions about the extent to which they should arbitrate truth, either directly or through working with 3rd parties.

They will have a set of policies and rules that are usually made public at least for larger and more longstanding platforms.

And they will often have access to internal and external subject matter experts and a considerable body of ‘case law’ to help guide specific decisions.

I want to step back from specific policies and decisions and describe the factors that shape those decisions.

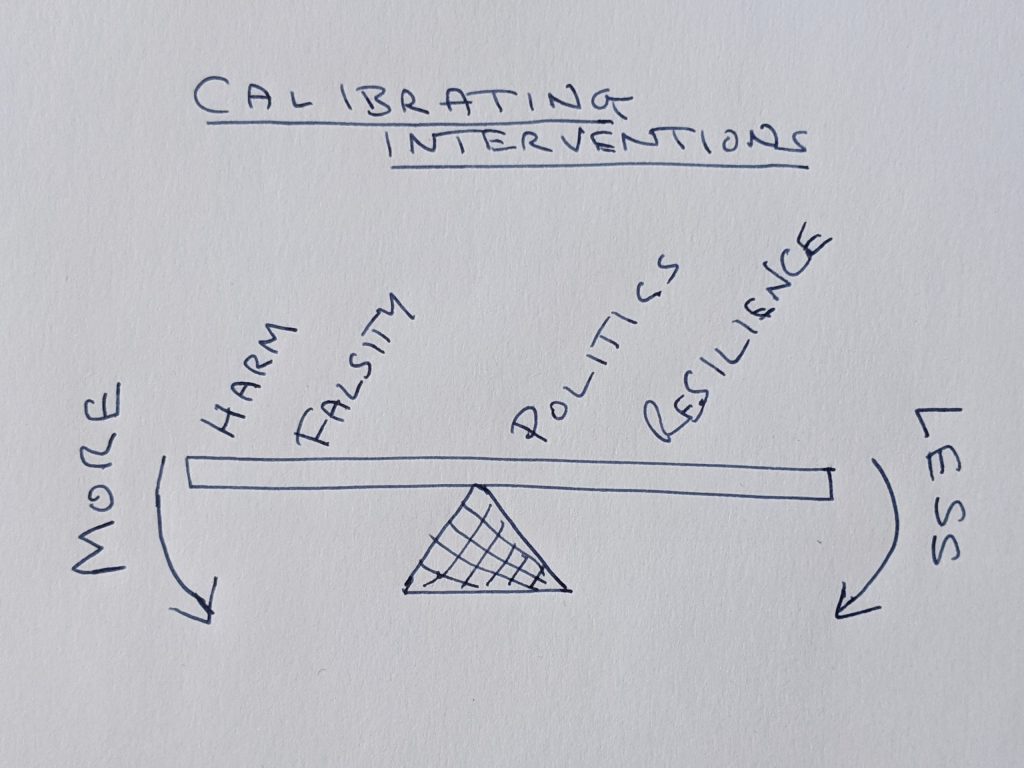

Jumping straight in, the following sketch sets out some factors and how they impact on decision-making.

Let’s unpick this now.

As will become clear, the four factors I am going to describe all involve the application of a significant degree of judgement – this model will not help those looking for bright line decisions.

On one side of the balance, we have two factors that act as drivers towards platforms intervening.

Falsity is the extent to which something is demonstrably wrong and in this model it does come in degrees.

Harm encompasses a wide variety of damages to people and property and there may not be consensus around all of these.

The focus has naturally been on these two factors in the misinformation debate and we can build models where either or both of these are the only considerations.

Platforms might decide to suppress content once it reaches a certain level of Falsity or Harm (in their judgement) or some combination of the two.

For example, Facebook has a harmful misinformation policy in its Community Standards that may lead to content removal when there is Falsity + Harm.

It is worth noting that Harm is such a strong factor that platforms are generally comfortable removing Harmful content, even where there is no Falsity, under their policies against incitement, hate speech etc.

I am not aware of there being material support anywhere for a pure Falsity model where all untrue content is removed even when it is harmless.

Suppression based on these two factors may then kick in where content is a) Harmful, or b) Harmful and False, but not when it is c) False but Harmless.
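To make the three cases concrete, here is a minimal sketch of this two-factor rule. The function name and the outcome labels are my own illustrative assumptions rather than any platform's actual policy logic, and treating Falsity and Harm as simple yes/no values flattens what are really matters of degree and judgement.

```python
from typing import Optional


def two_factor_outcome(is_false: bool, is_harmful: bool) -> Optional[str]:
    """Hypothetical two-factor rule: Harm triggers suppression, Falsity alone does not.

    Returns an illustrative label for the kind of policy that would apply,
    or None when the content would be left alone.
    """
    if is_harmful and is_false:
        # Case b) Harmful and False, e.g. a harmful misinformation policy.
        return "suppress: harmful misinformation"
    if is_harmful:
        # Case a) Harmful without Falsity, e.g. incitement or hate speech policies.
        return "suppress: other harm policies"
    # Case c) False but Harmless, and anything true and harmless, stays up.
    return None


# Print the full truth table for the two factors.
for is_false in (False, True):
    for is_harmful in (False, True):
        print(is_false, is_harmful, two_factor_outcome(is_false, is_harmful))
```

Laid out this way, Falsity only changes which policy applies; whether suppression happens at all depends on Harm.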

While these Falsity and Harm factors will not be new or surprising, the explicit recognition of Politics and Resilience as factors on the other side may be more contentious.

Politics is the extent to which something has become a partisan issue.

Resilience is an assessment of how resistant people are to particular forms of misinformation.

These two factors pull the balance back towards not acting in particular scenarios.

In crude terms, when misinformation becomes a partisan issue and/or when we judge that it is unlikely to change people’s views then there may be a stronger case against action.
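The balance can be sketched as a toy calculation. Everything specific here is an assumption made for illustration: the 0 to 3 scale, the equal weighting of the four factors, and the names `Factors`, `balance` and `tilts_towards_intervention`. Real decisions rest on judgement rather than arithmetic, so this shows only the direction in which each factor pulls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Factors:
    """Illustrative weights on an assumed scale from 0 (none) to 3 (very high)."""
    falsity: int
    harm: int
    politics: int    # how partisan the issue has become
    resilience: int  # how resistant people are to this misinformation


def balance(f: Factors) -> int:
    """Positive values tilt towards intervention, negative values away from it."""
    drivers = f.falsity + f.harm            # push towards intervening
    restraints = f.politics + f.resilience  # pull back towards not acting
    return drivers - restraints


def tilts_towards_intervention(f: Factors) -> bool:
    return balance(f) > 0


# Hypothetical inputs, not taken from any real case.
high_harm_claim = Factors(falsity=2, harm=3, politics=1, resilience=0)
partisan_claim = Factors(falsity=1, harm=1, politics=3, resilience=2)

print(balance(high_harm_claim), tilts_towards_intervention(high_harm_claim))
print(balance(partisan_claim), tilts_towards_intervention(partisan_claim))
```

Equal weights are the simplest choice, not a claim about practice; given how strongly Harm counts on its own, a closer approximation might weight it more heavily than the other factors.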

Illustrating the Model

I can best flesh this out using some examples (with another reminder that I am trying to describe how I think people are making decisions today rather than arguing that these are necessarily the only good outcomes).

If, after reading the examples, you find the model to be a reasonably accurate description of current reality then I will have achieved my first goal and we can move on to debating whether reality is good enough or needs upgrading.

Example 1 – ‘the earth is flat’. (As a proxy for similar ‘eccentric’ beliefs).

FALSITY – this is as demonstrably false as it gets.

HARM – there is no evidence that anyone is being harmed today by people sharing their belief that the earth is flat.

POLITICS – there may be the odd politician who holds this view privately but it is not a feature of partisan debate anywhere.

RESILIENCE – with hundreds of years of evidence contradicting the assertion, people are well equipped to dismiss this out of hand.

DECISION – while Falsity is high, there is no Harm or Political angle, and we are so Resilient to this idea that there is no need to make any intervention.

There does not seem to be any pressure for platforms to deal with false claims in this oddball bucket.

Where Resilience to a claim reaches a certain level we might even argue that it is not misinformation at all and ignore it.

Example 2 – anti-vaccination.

FALSITY – levels of falsity can vary significantly in the anti-vaccination arena. Content may be deemed misleading because it selectively quotes from otherwise genuine scientific publications. Or it might involve outrageous conspiracy theories making unfounded allegations about public figures. These different weights mean there may be greater impetus to act against some claims than others rather than applying a single treatment to all anti-vaccination content.

HARM – this will vary across communities, with a stronger case for intervention where there is a clear and immediate health risk than in places where the harm is less clear. There will often be very good data to inform this judgement as vaccination and illness rates are closely monitored.

POLITICS – in most countries there is a consensus between politicians that people should participate in vaccination programs. But this has become a partisan issue in some places. Where political support for anti-vaccination claims is from the fringes then this may not materially affect the balance, but where it is mainstream then this will be a factor.

RESILIENCE – many people do understand the importance of vaccination and are resilient at least to the wilder conspiracy theories. But levels of resilience vary between countries and, even where previously high, they can drop quite rapidly in the face of scary novel claims, especially where these have influencer support.

DECISION – With vaccination, the balance may land in different places in different countries. Where local data shows serious health problems because of the low take-up of vaccination then there is likely to be a compelling case for intervention even if partisan politics is involved. The case will be even stronger if there is low Resilience in the population because baseline information is either lacking or false.

Where there is significant Harm being caused by demonstrably False information in a community and there is Political consensus on the issue then there is a strong case for intervention.

If there is significant political support for the contested claims then this may become more finely balanced and the levels of Falsity and community Resilience will be important factors.

Example 3 – Covid-19.

FALSITY – there is a range of levels of falsity as with anti-vaccination. Some claims like 5G causing Covid-19 are as demonstrably false as anything can be. Other claims may relate to issues which have not been sufficiently tested for falsity to be absolute.

HARM – the Harm factor weighs very heavily when considering claims about the response to Covid-19. There is a clear and present danger of serious illness and death at massive scale.

POLITICS – in most countries there is a very high degree of political consensus about the facts of the disease and what needs to be done to combat it. Disagreements over whether specific measures strike the right balance between economic and health considerations are quite common but these are generally based on an agreed common set of facts. In some countries, there has been partisan political support for potentially misleading claims about health responses.

RESILIENCE – there is very low Resilience to Covid-19 misinformation because of the novelty of the disease and the appetite people have for solutions.

DECISION – The balance tilts strongly in favour of intervention because of the high level of Harm and low levels of Resilience or partisan Political dispute. There may be a case for intervention even where levels of Falsity are low. In those countries where there is some partisan Political support for disputed claims the balance may still land on the side of intervention.

When we compare anti-vaccination and Covid-19 in this model, this helps to explain why I said at the outset that I believe we may see more intervention against speech by politicians but that there remain important constraints.

With Covid-19, the factors stack up in favour of intervention even against a leading politician because of the low levels of Resilience in the community and the high levels of Harm.

These same conditions will apply in some cases with anti-vaccination if there is a serious outbreak of disease and there is a poor baseline of accurate information in the community.

In other cases, where disease is under control because vaccination rates remain high, even if not optimal, and there is a good baseline of information in the community, the balance may tip in favour of lighter intervention when this becomes a political issue.

I will set out a final example and then come back to why I think this balance, however uncomfortable, is an accurate reflection of how interventions will be calibrated if avoidance of partisanship remains a goal.

Example 4 – the claim in the 2016 UK Referendum that leaving the EU would save “£350 million per week”. (As a proxy for a host of other claims during election campaigns).

FALSITY – the data shows that the real figure for UK contributions to the EU was £250 million per week. The campaign used a gross contribution number that failed to take into account a rebate mechanism. I may lose some friends with this judgement but I would class this as at worst a medium-weight Falsity. Large amounts of money were being paid to the EU and this number does appear in the accounts – it is a truth but not the whole truth.

HARM – I may again lose friends here but would argue that there is a low weight of Harm in situations where the ‘bad’ outcome is that the other side wins in an election. We should be careful not to equate a partisan view that a particular election outcome is harmful with misinformation causing Harm in and of itself. Disruption of the democratic process and promotion of violence are the direct Harms that carry most weight at election time rather than misleading information in otherwise legitimate campaign material.

POLITICS – this claim carried huge political weight as it was promoted by a major player in a very heated election period. The fact that it came from senior figures in the official campaign is material. It gains extra weight in terms of this equation because it was a focus of the campaign and reactions to it were very highly partisan.

RESILIENCE – if I haven’t lost enough friends already, then my judgement that people were actually quite resilient to this claim may finish that off. We expect exaggerated claims at election time and they are often widely debated as this one was at the time. This claim was endowed with extra weight when the unexpected side won but the selective use of big money numbers is part and parcel of most election campaigns. People will have reacted to it according to their existing partisan views rather than being strongly influenced by exposure to it.

DECISION – The balance tilts against intervention here as heavy Politics and Resilience outweigh lighter Falsity and Harm.

The arguments I have made for the balance being against intervention here are ones that could be made for many claims by election campaigns.

These are often challenged and found to display varying degrees of Falsity.

I am describing a position in which there would rarely be intervention in relation to campaign material under normal election conditions.

I believe that this correctly represents the status quo for where platforms would like to be but this has become a controversial stance.

This is not a blanket exemption as the balance might tip in particular circumstances, for example where community Resilience is low because democratic traditions are weak, or where a campaign is intent on Harms that go beyond the normal range of election outcomes.

The balance also shifts towards intervention if there is a risk of direct Harms like violence and the corruption of the electoral process.

We should expect intervention against even leading politicians if they call for violence or publish misleading information about when and where to vote under this model.

There may be different judgements about when a certain form of speech constitutes incitement or is intended to steer people away from the polls but such claims will tilt the balance towards intervention in some degree.

Partisan Truths

I have just described a model under which most political campaign claims will not lead to significant intervention, and where political support for alleged misinformation may make it less not more likely that platforms intervene.

Yikes.

This will certainly not be a satisfactory position if you consider that platforms should only weigh the Harm and Falsity factors in their decision-making.

You may rather feel that we should give no weight to politicians when they are on the wrong side of the facts.

And you may see introducing a community resilience factor as a rationale for less intervention as dodging responsibility.

Even if you accept the Political factor is important, then you may think it needs to be on the other side of the equation as there is more urgency to act when a politician, especially a leading one, promotes misinformation.

This is where the avoidance of partisanship becomes such a constraint.

The suppression of speech that is predominantly being made by one party in a contested space is an unavoidably partisan action.

If one party takes up an issue like anti-vaccination as part of its platform and then has its content systematically downgraded as compared with that of other parties then the effect will be one of partisan bias.

There is no way to avoid this effect even if the intention is otherwise.

There are circumstances where the argument to proceed is compelling irrespective of the partisan impact.

The recent actions we have seen against President Bolsonaro’s content fall into this category and I would expect there to be more cases where politicians’ speech is removed as Covid-19 proceeds.

When this happens, the politician or their allies will claim these are partisan interventions.

The instinctive response from platforms will be to deny this and they will refer to their rationale as being one of harm prevention.

This can result in an unsatisfactory argument pitching effect – which is partisan when one side’s content is removed – against intent – which platform decision-makers believe strongly was non-partisan.

We may have better debates if we recognise that both things are true for some of the most sensitive decisions.

An intervention can be both motivated by non-partisan considerations and have a significant partisan effect.

The avoidance of a partisan effect is a factor in decision-making but does not create a veto and in some cases, as with the recent claims by the Brazilian President, an exception is being made.

I expect there to be more such interventions both around Covid-19 and in other perhaps even more sensitive policy areas.

When they happen we will have a better debate if those who support them and those who oppose them can recognise all factors in the decision-making.

This is why I wanted to set out a model for this that I believe does match up quite closely with how decisions are made today.

Next Steps

If you accept that this is a reasonably accurate description of the status quo then that leads us on to questions about whether it is ‘right’ for platforms to place so much weight on not being partisan arbiters of truth.

As I thought about this, I was struck last week by this chart in a report from the Reuters Institute for the Study of Journalism (as a further, I hope non-contentious, disclaimer, I will be a Visiting Fellow at the Institute for the rest of 2020).

If you click on the Left and Right tags at the top of the chart, you will see that trust in Government and News Organisations swings dramatically according to where people in the US sit on the political spectrum.

This is an interesting illustration of what happens when platforms that are in theory neutral – government in its governing rather than political capacity, and the news media as a balanced and necessary fourth estate – have become labelled as partisans.

There is a lot that is special about the current state of partisanship in the US.

There is also a lot to unpack in relation to social media (which has its own low trust ratings in the survey) as it is where people see content from many parties who each have their own trust ratings – government, news media, experts, people you know, and people you don’t know.

I will explore this further in the follow-on post by looking at what might happen if platforms took a different view of partisan effects.

Summary :- an assessment of the factors that shape platform responses to misinformation. I look at the response to Covid-19 and move on to describe a model for the factors influencing the way platforms intervene more generally. Partisan political impacts are an especially complex consideration.