You may have noticed a recurrent theme in my posts is a plea for us to get detailed and specific when talking about online harms.

I described a model for Harm Reduction Plans as a way to structure conversations between platforms, regulators and the public around harms and the measures that online services are taking to respond to them.

I used the example of a harm reduction plan related to child abuse images to illustrate how I thought such a plan might work.

A friend correctly responded to the post by observing that other harms can be much more complex to define, as there is less consensus around them than there is for child abuse images, which are prohibited in most countries.

In this post, I want to look at one of these other more complex issues, which is the treatment of images showing adult nudity.

As well as this piece, you might want to listen to a conversation about nudity policy that Nicklas Berild Lundblad and I recorded as a regulate.tech podcast episode.

Which brings me to the funky title of the post, which is not some kind of ‘interesting’ Christmas present wish list but a reflection of the level of detail that you have to get into when adopting what may initially seem to be a clear and simple policy :-

“We restrict the display of nudity or sexual activity because some people in our community may be sensitive to this type of content.”

Facebook Community Standards

Having decided to ban adult nudity, you will then have to decide whether any particular image that is reported to you is nudity or not, and this means considering questions like these :-

- exactly how much material in a fishnet top (this may be a function of both net density and positioning) has to cover a woman’s nipple for it to be regarded as clothed and therefore allowable in an image?

- should a man be considered to be naked or clothed if he is otherwise nude in a photo but has artfully placed a sock over his genitals, and does it matter whether the sock covers ‘everything’?

And, yes, there are people working for the platforms whose job it is to look at lots of examples of these kinds of borderline images to decide where the permit/prohibit line should be set for content reviewers and algorithms.

But, no, we should not think this is an altogether ‘cushy number’ as these same people have to spend most of their time making decisions about much more disturbing content – I hope we might allow them a few moments of light relief when questions like these are on the agenda.

So, if a policy banning nudity like this is going to be hard to define and implement then why would a platform do it?

No Nudes For Prudes

A common reason that I heard cited when I was working for a US-based platform was that this was some kind of reflection of US cultural attitudes towards nudity that are seen to be quite prudish.

This sniffiness about US attitudes itself reflects quite a narrow European perspective, and when we instead take a global perspective we find that the US is at the more permissive end of the spectrum, even if it can be more conservative than some European countries on broadcast material.

These assumptions about US attitudes blew up a few years ago after Facebook removed an image of an artwork shared by someone in France.

The artwork is a painting called ‘L’Origine Du Monde’ by Gustave Courbet and its removal led to a long-running dispute in the French courts.

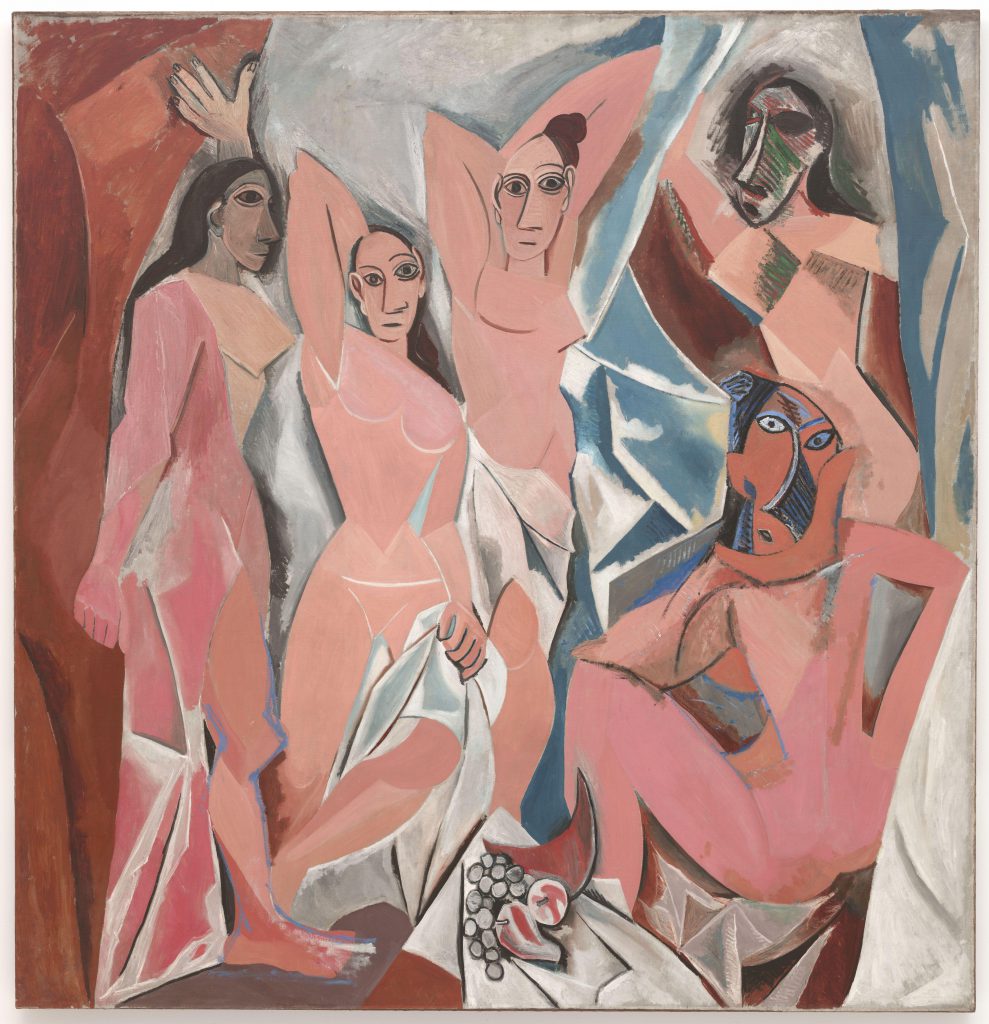

The image had actually been taken down in error: Facebook does not prohibit images of paintings that contain nudes, but the reviewer had clearly failed to recognise that this particular image (which is quite realistic, unlike the Picasso shown here) was in fact a painting rather than a photo.

If a reviewer failed to recognise the Picasso above as a work of art then I think we might all feel it is fair to criticise them, but if you take a look at L’Origine Du Monde for yourself you may feel the confusion was more understandable.

I will not go into the ins and outs of the whole legal saga but rather use this to illustrate both the challenge for reviewers in making decisions about nudity and the sensitivities around getting these decisions wrong.

My experience of the people working at US platforms is that they are anything but prudish in their attitudes and it is more helpful to look elsewhere for why they have concluded that they should prohibit material on the platforms they run which they may not find personally objectionable.

If Not Prudes, Then What’s The Harm?

When it comes to general prohibitions on adult nudity, we are not necessarily talking about this content causing harm at all, and this is the first key point if we are to get more precise in our Harm Reduction Plans.

A broad prohibition on portraying adult nudity may both cause harm, in that it is over-restrictive of legitimate forms of expression, and prevent other harms that might occur if the reverse policy applied and there were broad permission to share this material.

The primary rationale for a platform wanting to ban nudity may have much more to do with how people use the service than with harm prevention in any strict sense.

For a social media service that presents users with a stream of stories, we can see that users may feel uncomfortable with content containing nudity, especially where sexualised, appearing next to family and friends content.

We sometimes talk about content as NSFW – Not Safe For Work – and we might think of social media services as being concerned that content in people’s newsfeeds is not NSFFF – Not Safe For Family and Friends.

This does not mean this is the right standard for other platforms, and indeed even within the Facebook family there do seem to be different expectations with users of Instagram where feeds may be less family-oriented.

Restriction Harms

Harm Reduction Plans should recognise where content restrictions that a platform is imposing are likely to cause new, unintended, harms.

With bans on adult nudity, there is a general harm to expression if people cannot share images that they find acceptable and have no reason to expect will cause harm to others.

Within those broad limitations, there may be specific situations where we would see the harm as being especially significant.

In the context of nudity, the ability to share information about breast-feeding and women’s health has come up as important where content of this type has been restricted for showing naked female breasts in contravention of platform policies.

The Facebook External Oversight Board has produced an interesting opinion on just this subject in its recent set of decisions, supporting the idea that images related to breast cancer should be carved out of the general prohibition on nudity.

Nudity may also feature in images of important events, for example as a tool used by political protesters to draw attention to a cause, and this may be another area that platforms will wish to exempt from any general prohibition.

This dynamic of developing a list of exemptions provides some mitigation of the harms to freedom of expression that will result from any broad prohibition adopted by a platform.

We should now look at the harms prevented by a broad ban to understand whether a broadly permissive approach, with specific limitations, might be a viable alternative.

Permission Harms

Unlike some other types of content, adult nudity is not always and necessarily harmful – some images can be very harmful, eg so-called ‘revenge porn’, but others may be entirely benign, eg someone from a liberal culture happily sharing a photo of their bits on a beach.

If we imagine that a platform wanted to be as permissive as possible then this will help us to understand what they would need to put in place to separate harmful nudity, that they would prohibit, from benign nudity, that they would permit.

There are three big challenges to consider – consent, age and audience.

A platform would first need to take steps to ensure, with a high level of confidence, that any people appearing in nude images have consented to this content appearing.

The consequences of getting this wrong and allowing the free circulation of non-consensual nude images might range from mild embarrassment in some cultures through to catastrophic reputational damage and even threats to life in other contexts.

This is a particular risk for social media platforms which are designed to help content reach people who know each other personally and professionally – a fact that has not been lost on people intent on sexual blackmail.

These questions of consent have recently been raised in the context of pornography websites, which are under pressure to tighten up their verification.

They have been less material for some of the large platforms precisely because they have broad nudity restrictions in place, but any move towards a more permissive approach would put them front and centre.

If a platform were able to implement a robust consent-verification mechanism, then the next challenge they would face would be to ensure that any people represented in the images are not minors.

There would be very serious consequences for both any users distributing the images and the platforms themselves if these turned out to be showing under-age subjects.

This would be an especially difficult challenge where younger users are concerned, as a platform would need to assure itself, for example, that an image is of a nineteen-year-old rather than a fifteen-year-old before permitting it.

These two factors on their own – consent and age verification – might be sufficient for a platform to decide that only a blanket prohibition is workable, but they might find a way through so that there is a corpus of images that they are confident they could allow to be circulated.

This brings us to the third major consideration, which is that of how audiences would experience images of nudity on a particular platform.

In most countries, there are expectations that minors will be exposed to different content from adults, so it is likely that any newly permitted images would have to remain prohibited for this cohort.

There are many countries in the world where images of nudity are culturally unacceptable, and may also be illegal, so there would be a need to control geographical distribution to respect these sensitivities.

It is also likely that people would feel uncomfortable about these images appearing in their feeds when they are viewing content in a public place, so we could expect user demand for a filter that would make sure they are not shown.

Back to A Blanket Ban?

Each of the harms I described in the last section – non-consensual sharing, under-age images, and exposing people to nudity inappropriately – might be sufficient on its own to make a platform very wary of this type of content.

It would be hard for a platform to stand up the argument that it was meeting its ‘duty of care’ to people if it had not taken significant steps to address each of these potential harms.

A blanket ban approach is a very robust response as it should, if properly implemented, prevent all three harms happening at any kind of scale.

The price paid for this in terms of limitations on speech can be reduced to some extent by carve-outs for specific forms of nudity, but this will still mean a lot of otherwise legal and benign speech may be restricted.

We can expect people to keep challenging blanket bans with examples of nudity that are not harmful, but it is hard to see how major platforms can become much more permissive and also be confident they are not opening the door to an unacceptable level of harm.

As we debate platform content policies more generally, there are often concerns that if major platforms restrict speech then this is a serious limitation on all forms of public discourse.

These are valid concerns but I worry that we can go too far in equating large platforms with the internet as a whole.

Nudity policy provides us with an interesting example where many major platforms have prohibited the same class of content, but this has not meant the content does not exist, as it patently has plenty of other homes on the internet.

Given this content mostly exists away from the major platforms, the most important rules governing the kinds of nudity content we can access are not platform policies but whatever the law is in our country of residence.

Of course, there are different concerns when it comes to more political types of content like hate speech but this is a reminder of the importance of the law, which defines what we can access anywhere on the internet, vs platform policies that only set the limits for specific (albeit large) sites.